One of the worst things to sample for any brute-force ray tracer is low-roughness specular highlights / reflections in motion blur. It gets even worse on fine displacement or bump, and worse still when there are lots of small highlights. When all of these things come together, sampling the highlights in motion blur becomes really hard. With conventional methods you end up relying on extremely high AA samples, and even then the highlight streaks will most likely still be dotty… And you won’t make any friends if they have to paint these streaks smooth in Comp :) So during the crunch time of a recent project I was brainstorming with some of my colleagues about how this could be fixed without needing too many samples, and I’ve been working on implementing that idea, which seems to work quite nicely.

If you try to sample this speedy fella in an environment like that, with this look, you will inevitably end up with streaky specs.

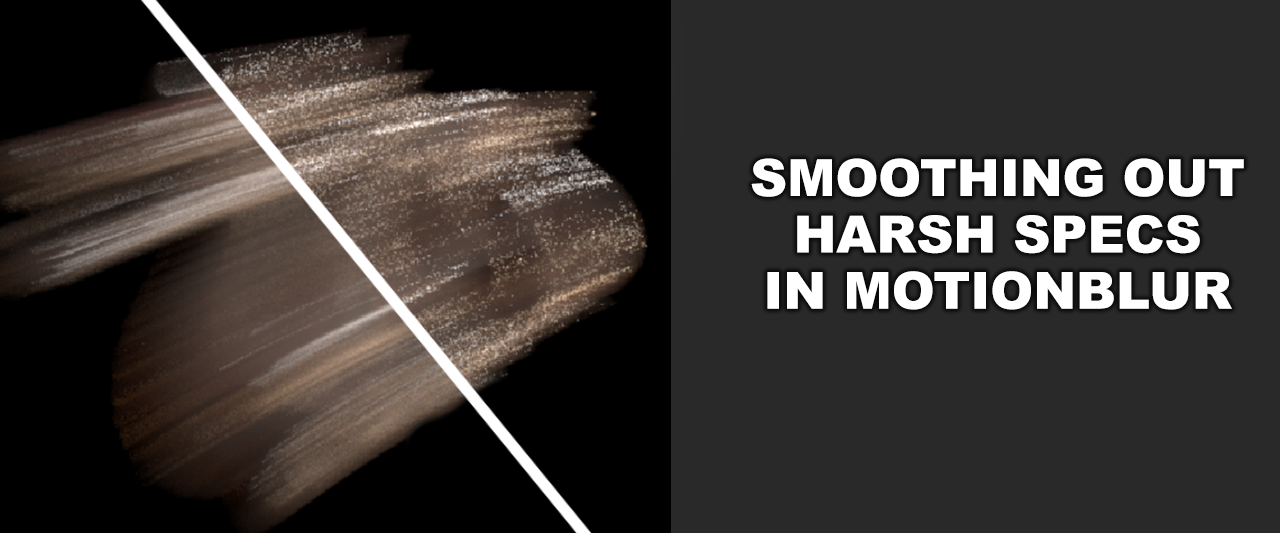

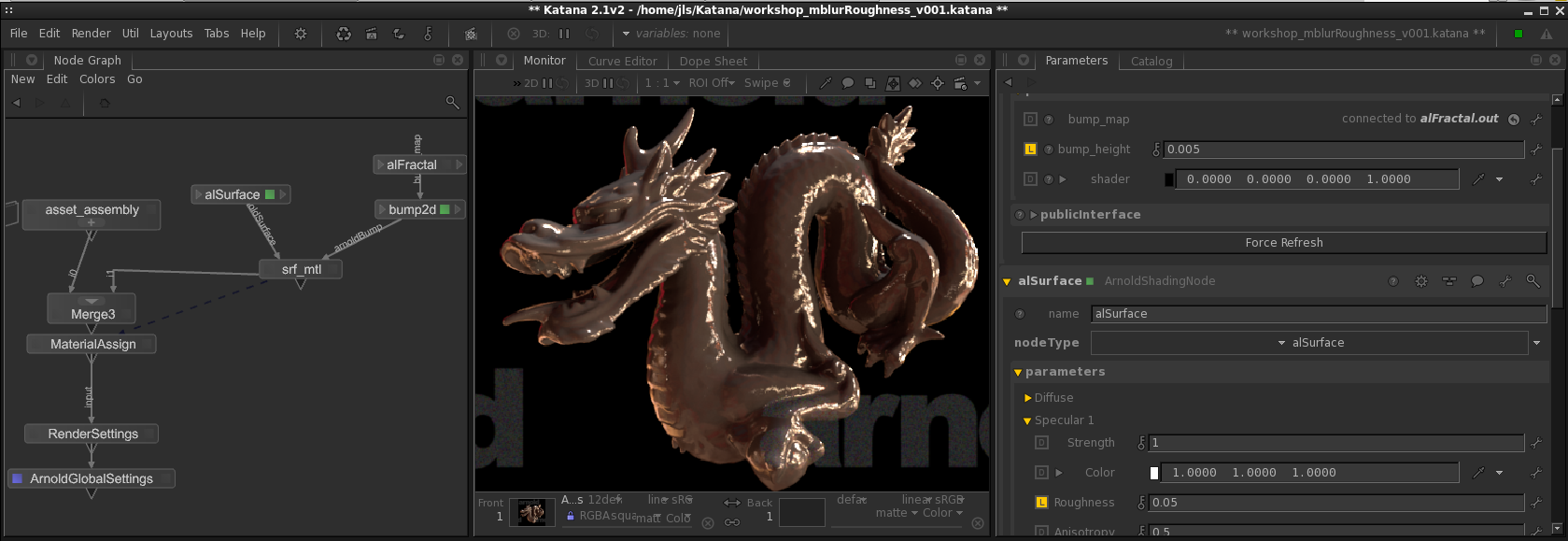

So how do you fix it without changing the look? Let’s say you have a simple scene like the following:

In this case I’m using Arnold, but the base concept is the same with any renderer. Low roughness on the specs + bumpy surface + lots of harsh highlights result in something like this when you turn on motionblur:

This one already has 12 AA samples + 2 light samples. It won’t get much smoother just cranking up the samples, but trust me it will render longer :) One way to smooth the streaks out however would be to increase the roughness and decrease the bump / displacement.

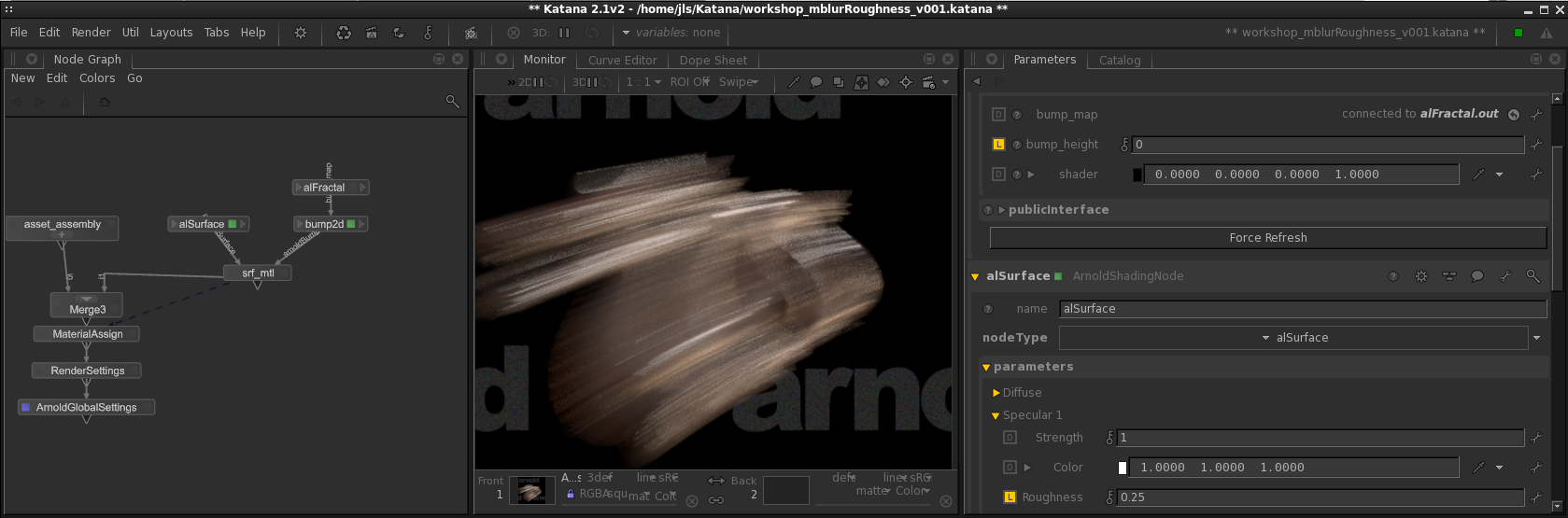

That looks way better. However the problem with this is that these adjustments will affect the look a lot and the asset will look very different in non-motionblurred frames. So let’s make it work only when the character is in motionblur.

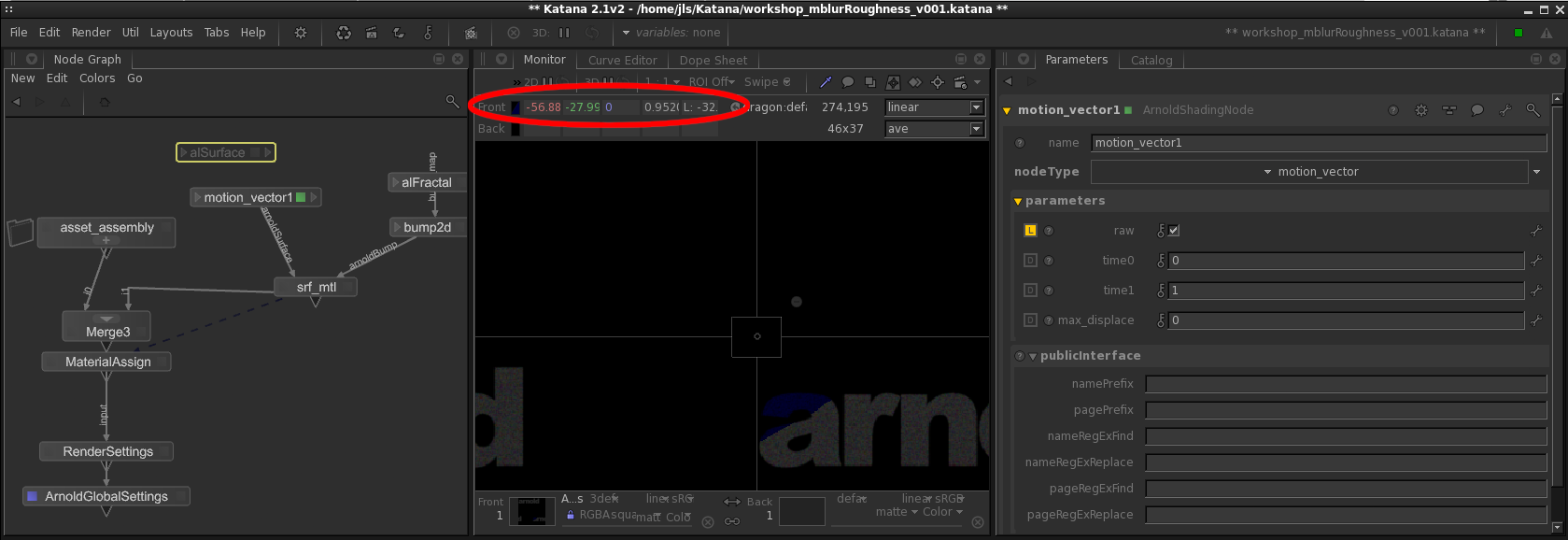

Most renderers offer some sort of a node that allows you to extract the motion vectors from a moving object, which basically define what distance each point on an object travels from one frame to another in XYZ.
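To make the idea concrete, here is a minimal Python sketch of what such a motion vector represents. The function name and the sample positions are made up for illustration; a real renderer hands you this value through its own node, and the exact space and units depend on the renderer.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def motion_vector(pos_now: Vec3, pos_next: Vec3) -> Vec3:
    """Per-axis distance a point travels from one frame to the next."""
    return tuple(b - a for a, b in zip(pos_now, pos_next))


# Hypothetical point on the character at frame N and at frame N+1.
print(motion_vector((1.0, 2.0, 0.5), (-23.0, 5.0, 0.5)))
```

Note that the components can be negative and are not limited to any particular range.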

As you can see, we get quite big values for this frame when just outputting the raw motion vectors. It’s very important that they are raw and not normalized, because otherwise you won’t have the actual movement distance, just something squeezed into positive values between 0 and 1 to make it easier to see.

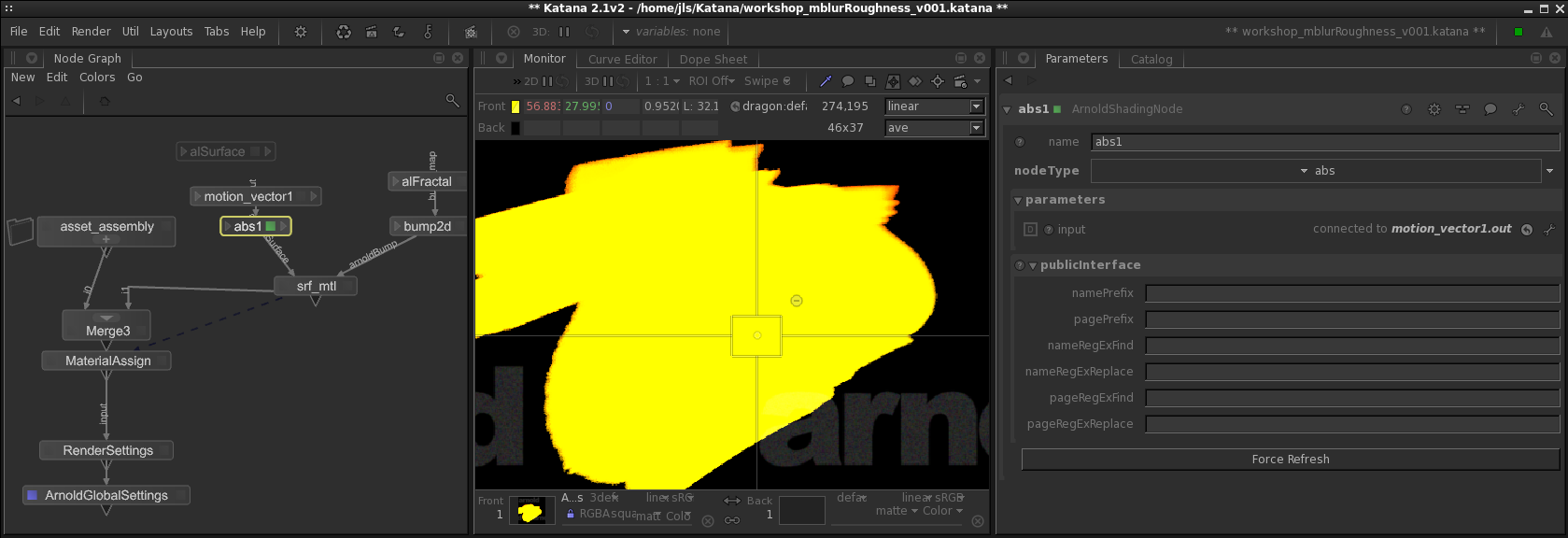

Now these negative values won’t help us much. Eventually we want to be able to use this information as a mask to switch between rough/blurry reflections (for motion blur areas) and sharp reflections (for non-motionblurred areas).

So let’s make sure these values are always absolute.

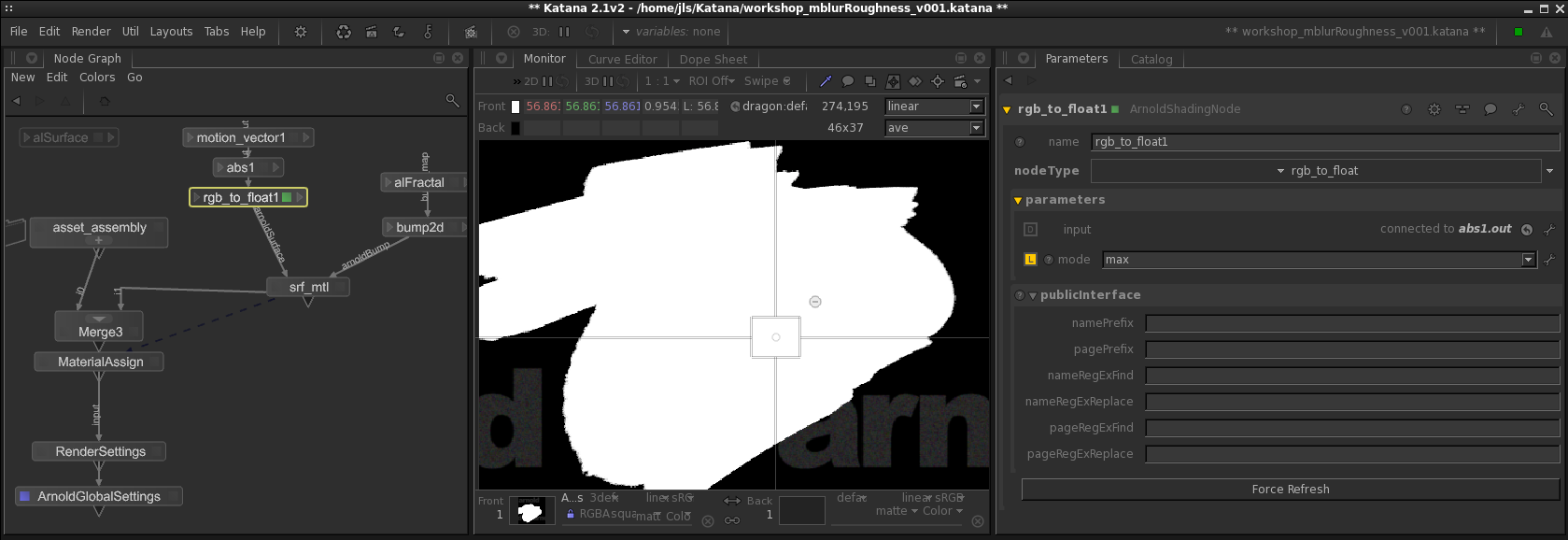

This way, no matter what the motion vector outputs, the values will always be positive. Now we need to convert this to a float to make it usable as a mask. A simple RGB to luminance won’t quite cut it here, as it gives you a weighted average of the movement in X, Y and Z, so fast motion along a low-weighted axis would barely register. Use a max of the three channels to make sure you always grab the biggest motion, or a sum, which is never smaller than the max.

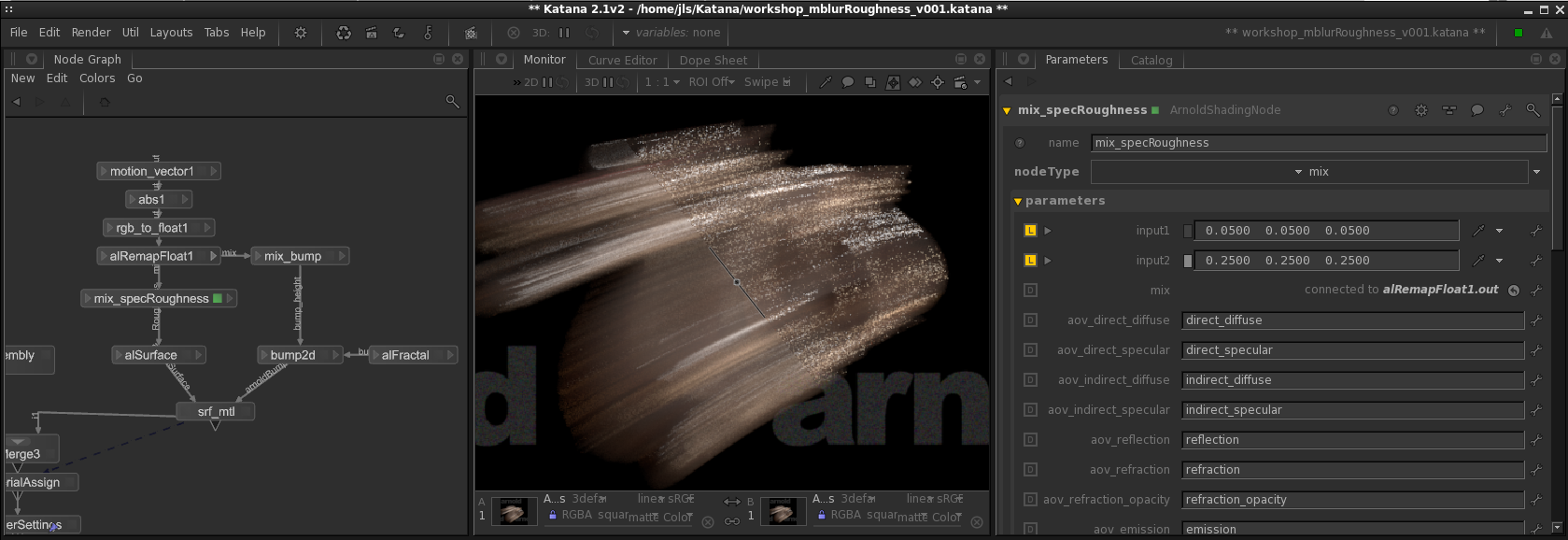
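The absolute-value step and the choice between luminance, max and sum can be sketched in a few lines of Python. This is only an illustration of the math, not renderer code: the function names are made up, and the Rec. 709 luminance weights are an assumption about what a typical RGB to luminance node does.

```python
def abs_vec(v):
    """Make every channel of the motion vector positive."""
    return tuple(abs(c) for c in v)


def luminance(v, weights=(0.2126, 0.7152, 0.0722)):
    """Weighted average of the channels (Rec. 709 weights, an assumption)."""
    return sum(w * c for w, c in zip(weights, v))


def max_channel(v):
    """Largest per-axis motion."""
    return max(v)


def sum_channels(v):
    """Sum of all per-axis motion; never smaller than the max."""
    return sum(v)


# Hypothetical point moving 30 units along -Z only.
moving_in_z = abs_vec((0.0, 0.0, -30.0))

print(luminance(moving_in_z))     # small: Z maps to the low-weighted blue channel
print(max_channel(moving_in_z))   # the full 30 units
print(sum_channels(moving_in_z))  # also the full 30 units
```

With luminance, the same speed gives a very different mask value depending on which axis the object happens to move along, which is exactly what you don’t want here.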

The only thing left to do is to bring the whole thing into a suitable normalized range so it can be used as a mask. How you remap it depends on the point at which you want to switch completely from one roughness to the other. With your usual range/remap node, the input max specifies the value at which the resulting mask should reach 1. If I set it to 30, for example, the mask will be white as soon as the motion vector reaches a value of 30, and it will smoothly blend down to 0 for areas/frames with no motion. We can then use this mask to mix between two roughness and bump values or textures. This way we retain the look of the original asset and get nice and smooth motion blur.
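Put together, the whole chain (absolute value, max, remap, mix) could look like the following Python sketch. It assumes a linear, clamped remap, which is what a basic range node usually does; the function names and the two roughness values are placeholders, not settings from the scene above.

```python
def remap_to_mask(value, input_max=30.0):
    """Linearly remap [0, input_max] to [0, 1] and clamp the result."""
    if input_max <= 0.0:
        raise ValueError("input_max must be positive")
    return min(max(value / input_max, 0.0), 1.0)


def motion_mask(raw_vector, input_max=30.0):
    """Absolute value -> max channel -> remapped float mask."""
    biggest_motion = max(abs(c) for c in raw_vector)
    return remap_to_mask(biggest_motion, input_max)


def mix(a, b, mask):
    """Blend from a (mask = 0) to b (mask = 1)."""
    return a * (1.0 - mask) + b * mask


# Placeholder look values: the original sharp spec and a rougher motion blur version.
sharp_roughness = 0.05
blurred_roughness = 0.4

for vec in [(0.0, 0.0, 0.0), (-15.0, 3.0, 0.0), (-45.0, 3.0, 0.0)]:
    mask = motion_mask(vec)
    print(vec, mask, mix(sharp_roughness, blurred_roughness, mask))
```

The same mask would drive the bump or displacement amount. A lower input max makes the switch to the rough look kick in at slower motion.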

Here’s a before and after frame with this method. Both have the same sampling settings.

_________________________________________________


Comments

That’s a great idea! Nice site too by the way!

thanks man

Great writeup, learned something!

Nice tips, Thanks for sharing

One thing we tried on a show was to render the geo without motion blur and bake the lighting down. Then we took that render, projected it back onto the same geo and rendered it with motion blur. The material was self-illuminated with all lights off, so no lighting calculation was done, and it worked super fast and clean on motion blur frames.

Thanks, very useful!

Do we need to pre-render the motion vectors before feeding them in as a mask, or can they be generated on the fly and read back in, like a loop?

Hi,

If the renderer allows you to read motionvectors in a shading node you can read that information directly in the shader – no need to prerender anything.

Best,

Julius